Technology & Academic Integrity

From 0% to 97% AI Detected: Is Turnitin Losing Its Reliability?

Published on October 16, 2025 by Joselito Bacolod

As a Master's student and a professional tutor, I live and breathe academic integrity. Tools like Turnitin are not just utilities; they are the gatekeepers we trust to maintain a fair and honest learning environment. But what happens when the gatekeeper suddenly becomes unreliable?

Today, I encountered something that shook my confidence in one of the most widely used AI detection tools in academia. It’s a story in two pictures.

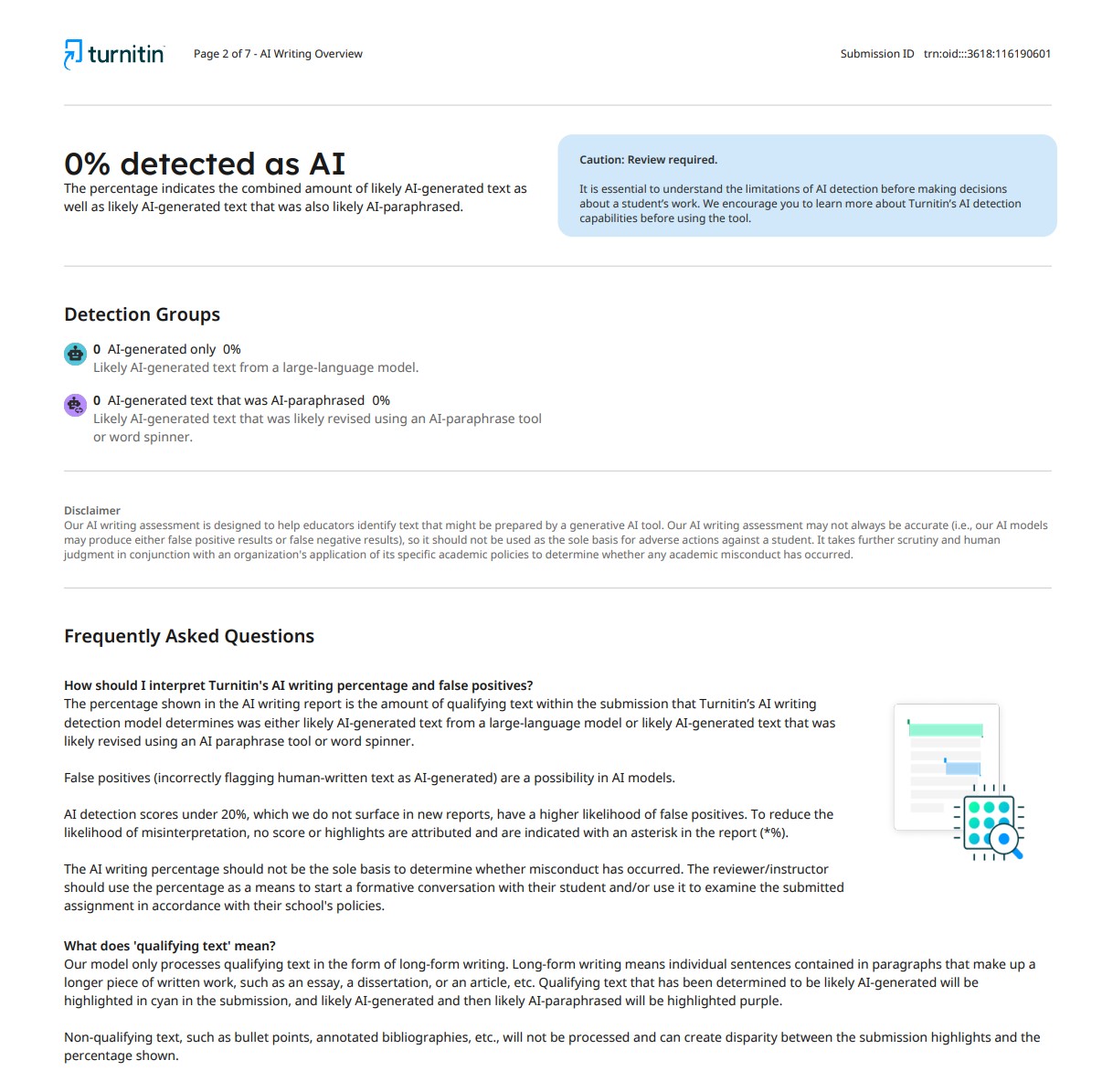

The First Scan: October 10, 2025

Just last week, I submitted a document, my own original work, to Turnitin for a routine check. The result was exactly what I expected: 0% AI detected.

A clean bill of health. This is the baseline we all aim for with our original writing.

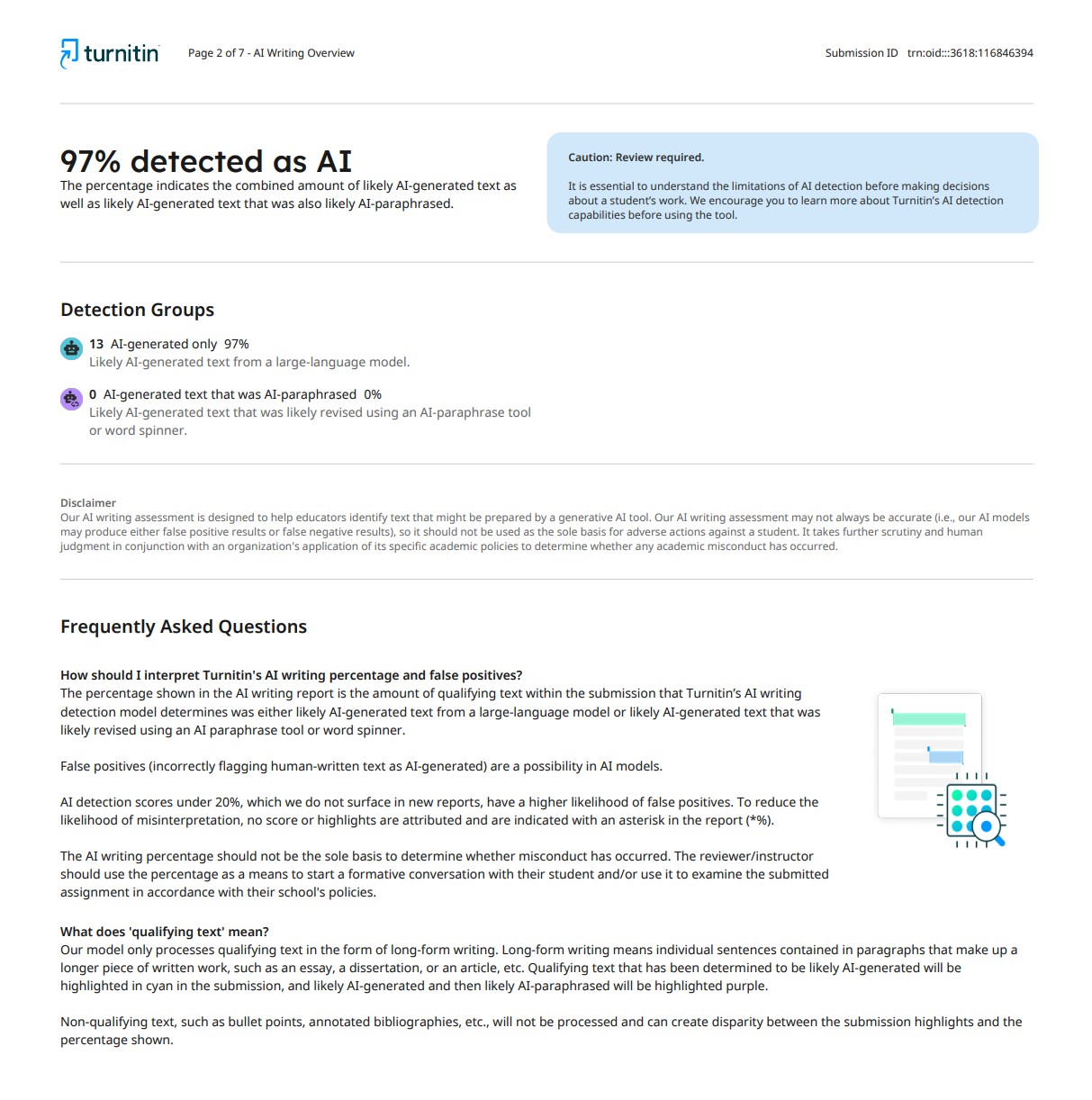

The Second Scan: Today, October 16, 2025

Out of curiosity, and while preparing materials for my client, I decided to re-upload the exact same document today. I changed nothing, not a single word, comma, or space. The result was shocking: 97% AI detected.

My jaw dropped. How could a document go from 0% to a staggering 97% AI-generated in less than a week? This isn't a minor discrepancy; it's a complete reversal that calls the tool's credibility into serious question.

This drastic change leads me to suspect that Turnitin may have rolled out an update to its detection algorithm between October 10th and today. While updates are meant to improve accuracy, this result suggests the opposite. It seems the new model might be overly aggressive, producing a massive "false positive."
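Before blaming an algorithm update, it is worth ruling out the simpler explanation that the two uploads were not actually the same file. One way to do that, sketched below, is to compare SHA-256 hashes of the two files. This is only an illustration: the function names and the file names in the usage note are hypothetical, and it says nothing about how Turnitin itself processes a submission.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file's raw bytes."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        # Read in chunks so a large document does not have to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_document(path_a, path_b):
    """Return True only if both files are byte-for-byte identical."""
    return sha256_of_file(path_a) == sha256_of_file(path_b)
```

For example, `same_document("essay_oct10.docx", "essay_oct16.docx")` (hypothetical file names) returns `True` only when the two uploads contain exactly the same bytes. A mismatch does not prove the text changed, because re-saving a file in a word processor can alter embedded metadata. A match, however, does rule out accidental edits.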

As an educator who uses Turnitin to check students' work and as a student who is subject to the same checks, I find the implications terrifying:

- For Students: Imagine being a diligent student who spends weeks on a paper, only to be flagged as having cheated. An accusation of academic dishonesty, even if false, can have severe consequences for a student's career and mental well-being.

- For Educators: How can we confidently use a tool that produces such inconsistent results? It erodes our trust and forces us to second-guess every report, potentially leading to wrongful accusations or, conversely, letting actual AI-generated content slip through because we no longer trust the flags.

This isn't just a technical glitch; it's a critical issue that strikes at the heart of academic trust. If a tool designed to uphold integrity becomes unreliable, it becomes part of the problem.

I'm sharing this as a cautionary tale for fellow students, tutors, and professors. We need to be more critical of the tools we use. This experience has made it clear that we cannot blindly accept an AI detection score as gospel. It must be treated as just one piece of a much larger puzzle, with human judgment and open dialogue being the most important components.

Have you experienced something similar? Let's discuss this. The reliability of our academic tools depends on us holding them accountable.